Running an autonomous pick and place task

What you need before starting

A robot arm and a gripper or hand integrated into GRIP, either through MoveIt!, via a launch file, or using external software to control the hardware. You also need a sensor integrated into GRIP.

Prerequisites

To illustrate the same example both when the whole robot is integrated through MoveIt! and when one part of the robot is controlled by an external controller, we are going to use a UR5 robot arm with an EZGripper. If you want to replicate this tutorial, please clone this repository, this one and this one into /home/user/projects/shadow_robot/base/src. Note that for the simulation of the EZGripper, you might want to clone this repository as well. In this tutorial, we are going to use a Kinect v2, so make sure to install libfreenect2 and to follow these instructions (only needed for the physical robot). For the grasping algorithm, please clone this repository. Now, compile them:

cd /home/user/projects/shadow_robot/base
catkin_make
source devel/setup.bash

Simulating a depth sensor

To simulate the behavior of depth sensors, this repository contains a Gazebo model that publishes all the topics such a sensor would publish (e.g. RGB image, point cloud). Make sure to add this model to your .world file (see here for an example).

Autonomous pick and place in simulation

In this section, we are going to run our robot and sensor in simulation. In other words, the whole robot will be operated through MoveIt! (most of the steps remain valid if your physical robot can also be fully controlled through MoveIt!).

Integrate your robot following this tutorial. In our case, we have declared two planners, one for the group

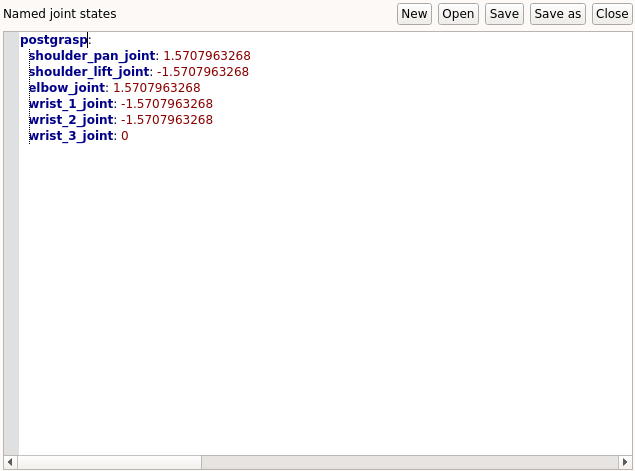

arm_and_manipulatorthat will control the arm and the other one forezgripper_finger_1, that will control the gripper. Don’t forget to add one sensor in the setup’s composition.If needed, define some waypoints (i.e. poses or joint states) your robot should reach during the task. In our case, we define a new joint state corresponding to the pose the robot should go to after grasping an object.
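A waypoint stored as a joint state is essentially a mapping from joint names to target values. The plain-Python sketch below is only an illustration, not GRIP's storage format: the joint names are those of the standard UR5 description, while the waypoint values are hypothetical. It also checks each target against an assumed symmetric limit of ±2π rad (±360°, the UR5 joint range), which catches typos before the task is run.

```python
import math

# Joint names used by the standard UR5 description.
UR5_JOINTS = (
    "shoulder_pan_joint",
    "shoulder_lift_joint",
    "elbow_joint",
    "wrist_1_joint",
    "wrist_2_joint",
    "wrist_3_joint",
)

# Assumed symmetric limit for every joint: +/- 2*pi rad (+/- 360 degrees).
JOINT_LIMIT = 2 * math.pi

# Hypothetical waypoint reached after grasping (values in radians).
AFTER_GRASP = {
    "shoulder_pan_joint": 1.57,
    "shoulder_lift_joint": -1.2,
    "elbow_joint": 1.4,
    "wrist_1_joint": -1.77,
    "wrist_2_joint": -1.57,
    "wrist_3_joint": 0.0,
}


def validate_joint_state(state):
    """Return a list of problems with a named joint state (empty if valid)."""
    problems = []
    missing = [j for j in UR5_JOINTS if j not in state]
    if missing:
        problems.append("missing joints: " + ", ".join(missing))
    unknown = [j for j in state if j not in UR5_JOINTS]
    if unknown:
        problems.append("unknown joints: " + ", ".join(unknown))
    for name, value in state.items():
        if abs(value) > JOINT_LIMIT:
            problems.append(f"{name}={value} outside +/-{JOINT_LIMIT:.3f} rad")
    return problems


if __name__ == "__main__":
    print(validate_joint_state(AFTER_GRASP) or "after_grasp is valid")
```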

In the

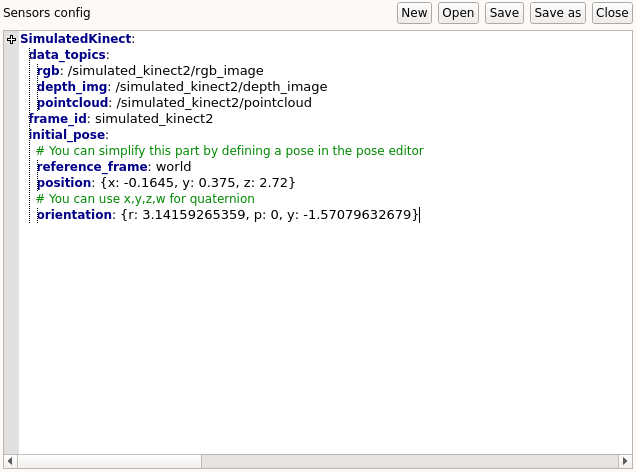

Setup configurationtab, add a new sensor in the corresponding editor (see here for more details). In our case, here is the configuration we used.

In the

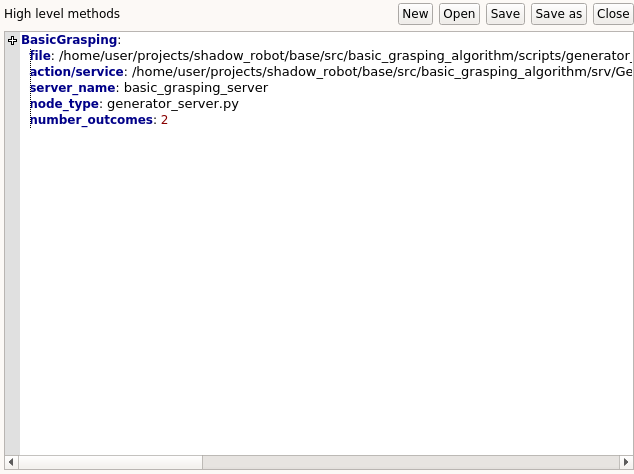

High level componenteditor, please add the grasping algorithm you want to run. Make sure that it follows the expected format. For the sake of simplicity, we are going to run a very simple algorithm. Its code can be found here.
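The algorithm used in this tutorial is linked rather than reproduced, and GRIP's expected format is not described on this page, so the following is only a hypothetical illustration of what a "very simple" grasping algorithm can compute. It assumes the object's points have already been extracted from the sensor's point cloud and expressed in the robot's base frame; it uses their centroid as the grasp position and places the gripper a fixed, hypothetical `approach_offset` above the highest point, pointing down.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


@dataclass
class GraspPose:
    position: Point  # x, y, z in the robot base frame (metres)
    rpy: Point       # roll, pitch, yaw (radians)


def simple_top_grasp(points: Iterable[Point], approach_offset: float = 0.10) -> GraspPose:
    """Hypothetical top-down grasp above the centroid of the object's points."""
    pts = list(points)
    if not pts:
        raise ValueError("no points: the object was not detected")
    n = len(pts)
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    top_z = max(p[2] for p in pts)  # highest point of the object
    # Assumed convention: pitch = pi/2 makes the gripper point straight down.
    return GraspPose(position=(cx, cy, top_z + approach_offset),
                     rpy=(0.0, math.pi / 2, 0.0))


if __name__ == "__main__":
    # Made-up points near the box position used in this tutorial (0, 0.45, 0.86).
    cloud = [(0.0, 0.44, 0.86), (0.02, 0.46, 0.90), (-0.02, 0.45, 0.88)]
    print(simple_top_grasp(cloud))
```

Whether "pitch = π/2" really points your gripper down depends on how its end-effector frame is defined, so check that convention before reusing the idea.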

Launch the robot (you can either click on the Launch robot button or use the shortcut Ctrl+l).

To spawn an object in the simulation environment, you can run the launch file we provide (in another terminal), which takes care of collisions and of adding the object to the MoveIt! scene:

roslaunch grip_api manage_object.launch object_type:=rectangular_box object_position:="0 0.45 0.86" object_rpy_orientation:="0 0 0.28"
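The launch file takes the box orientation as roll, pitch and yaw (assumed here to be in radians), whereas ROS pose messages and the MoveIt! scene store orientations as quaternions. The sketch below applies the standard fixed-axis roll-pitch-yaw to quaternion formula (rotations about x, then y, then z, as described in ROS REP 103); it is shown for illustration and is not taken from `manage_object.launch`. The helper `parse_triplet` is a hypothetical name for reading the space-separated arguments.

```python
import math


def rpy_to_quaternion(roll: float, pitch: float, yaw: float):
    """Convert fixed-axis roll/pitch/yaw (radians) to an (x, y, z, w) quaternion."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    return (
        sr * cp * cy - cr * sp * sy,
        cr * sp * cy + sr * cp * sy,
        cr * cp * sy - sr * sp * cy,
        cr * cp * cy + sr * sp * sy,
    )


def parse_triplet(text: str):
    """Parse a space-separated launch argument such as "0 0.45 0.86"."""
    values = tuple(float(v) for v in text.split())
    if len(values) != 3:
        raise ValueError(f"expected three numbers, got {text!r}")
    return values


if __name__ == "__main__":
    position = parse_triplet("0 0.45 0.86")
    orientation = rpy_to_quaternion(*parse_triplet("0 0 0.28"))
    print(position, orientation)
```

For `object_rpy_orientation:="0 0 0.28"` only the yaw is non-zero, so the quaternion reduces to (0, 0, sin 0.14, cos 0.14), a rotation of 0.28 rad (about 16°) about the vertical axis.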

In the

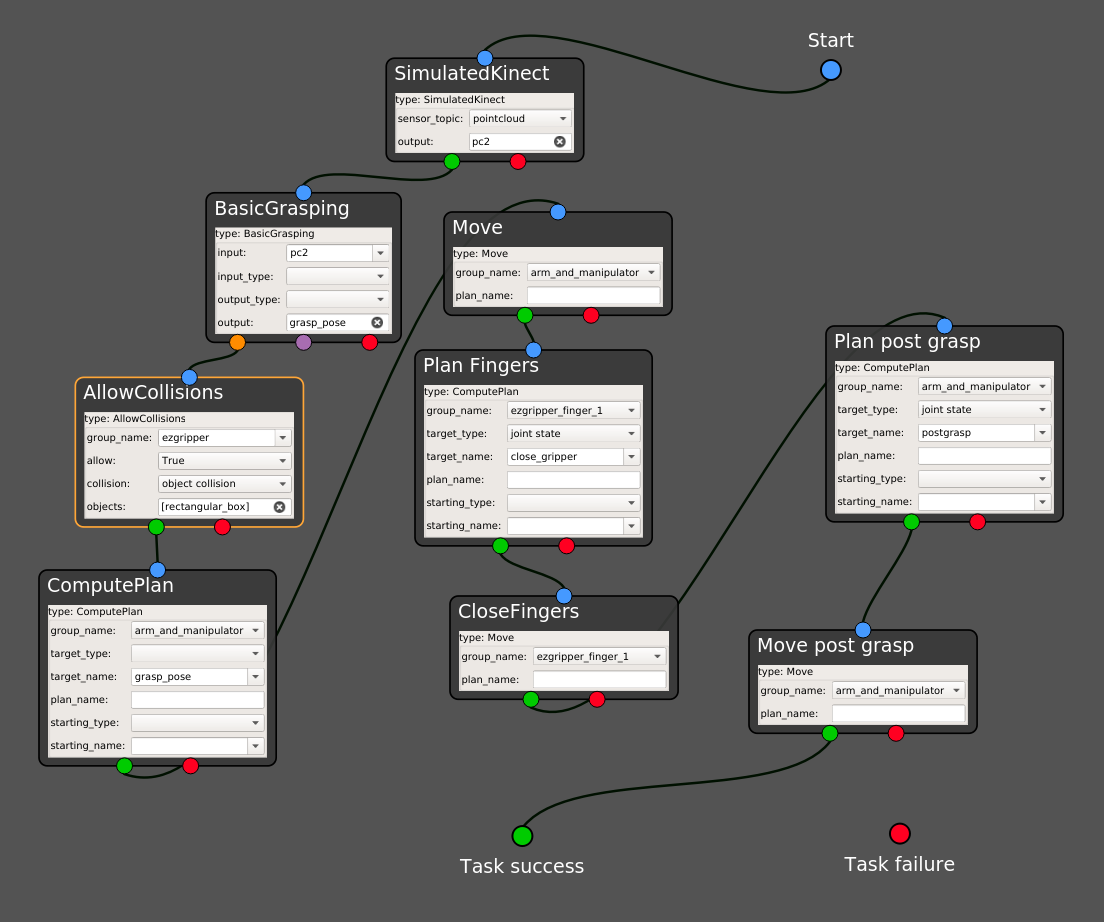

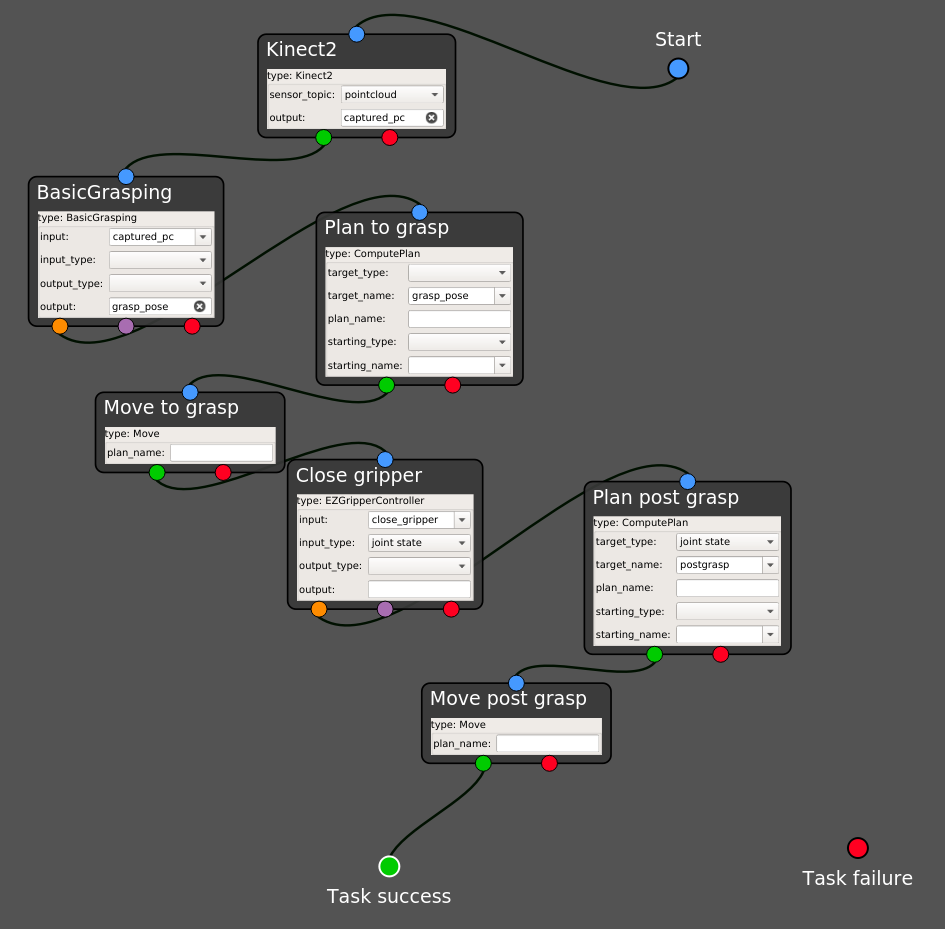

Task editortab, you should see a set of states ready to be used (among them, one with the name of your sensor). You can drag and drop them into the editor area.Configure each state according to what you want to implement. Note that you can still take the most of the dropdown lists in the generated states!
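Conceptually, the task assembled in the Task editor behaves like a state machine: each state runs, returns one of its outcomes, and each outcome (socket) is connected either to the next state or to a terminal outcome. The pure-Python sketch below mimics that flow; it is not the code GRIP generates, and the state sequence, the `success`/`failure` outcome names and the helpers `chain`, `run_task` and `make_state` are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A state receives shared data and returns the name of one of its outcomes.
State = Callable[[dict], str]
TERMINALS = ("task_success", "task_failure")


def chain(sequence: Sequence[str]) -> Dict[Tuple[str, str], str]:
    """Wire each state's 'success' to the next state and every 'failure' to task_failure."""
    transitions = {}
    targets = list(sequence[1:]) + ["task_success"]
    for current, nxt in zip(sequence, targets):
        transitions[(current, "success")] = nxt
        transitions[(current, "failure")] = "task_failure"
    return transitions


def run_task(states: Dict[str, State], transitions: Dict[Tuple[str, str], str],
             start: str, max_steps: int = 100) -> str:
    """Execute states from `start` until a terminal outcome is reached."""
    userdata: dict = {}  # shared between states, e.g. to pass a grasp pose along
    current = start
    for _ in range(max_steps):
        outcome = states[current](userdata)
        nxt = transitions.get((current, outcome))
        if nxt is None:
            raise KeyError(f"outcome {outcome!r} of {current!r} is not connected")
        if nxt in TERMINALS:
            return nxt
        current = nxt
    raise RuntimeError("task did not reach a terminal outcome")


def make_state(name: str, outcome: str, log: List[str]) -> State:
    """Hypothetical stand-in for a generated state: log the call, return `outcome`."""
    def state(userdata: dict) -> str:
        log.append(name)
        return outcome
    return state


if __name__ == "__main__":
    # Hypothetical task: sense, compute a grasp, plan and move, grasp, retreat.
    sequence = ["Kinect2", "GraspAlgorithm", "ComputePlan", "Move",
                "CloseGripper", "ComputePlanToWaypoint", "MoveToWaypoint"]
    calls: List[str] = []
    states = {name: make_state(name, "success", calls) for name in sequence}
    print(run_task(states, chain(sequence), start=sequence[0]), calls)
```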

Right click and select Execute. A window will prompt you for the name you want to give to the task. Once the name is provided, you should see the robot autonomously picking up the object and moving to the pose you defined!

Note

You might observe unrealistic behaviors when the gripper is in contact with the object (e.g. the object slipping or even flying away). You can partially improve this by tuning the physics parameters and changing the controller used in simulation. However, the main purpose of GRIP's simulation mode is to make sure that the workflow works, not to simulate realistic contact interactions.

Autonomous pick and place with MoveIt! and an external controller

In this section, we are going to carry out exactly the same task as in the previous section, but with our physical robot. This time, we are going to use MoveIt! to control the robot arm and an external controller wrapped into a ROS action to operate the gripper.

Integrate your robot following this tutorial and this one. In our case, we keep the same MoveIt! configuration package but only register one MoveIt! planner, for the group arm_and_manipulator. In the Hand configuration tab, we use the following configuration for the External controller editor:

EZGripperController:
  file: /home/user/projects/shadow_robot/base/src/EZGripper/ezgripper_driver/controllers/joint_state_controller.py
  action/service: /home/user/projects/shadow_robot/base/src/EZGripper/ezgripper_driver/action/JointStateGripper.action
  server_name: joint_state_ezgripper_controller
  node_type: joint_state_controller.py
  number_outcomes: 2
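These fields tell GRIP where the controller's code and its action definition live, which action server to talk to, and (presumably) how many outcomes the generated state exposes. As a hedged illustration only, the sketch below mirrors the same fields in a Python dataclass, checks that the configured files exist, and shows one plausible way a two-outcome state could map an action's final status to its outcomes. The status codes are those of `actionlib_msgs/GoalStatus` (3 = SUCCEEDED, 4 = ABORTED); the outcome names and the mapping itself are hypothetical, not GRIP's implementation.

```python
import os
from dataclasses import dataclass

# Terminal status codes from actionlib_msgs/GoalStatus.
SUCCEEDED, ABORTED = 3, 4


@dataclass
class ExternalController:
    """Mirror of the External controller entry used in this tutorial."""
    name: str
    file: str
    action_service: str  # the "action/service" field
    server_name: str
    node_type: str
    number_outcomes: int

    def __post_init__(self):
        # This sketch needs at least a success and a failure outcome.
        if self.number_outcomes < 2:
            raise ValueError("number_outcomes must be at least 2 in this sketch")

    def outcomes(self):
        """Hypothetical outcome names: 'success', 'failure', then 'outcome_2', ..."""
        return ["success", "failure"] + [
            f"outcome_{i}" for i in range(2, self.number_outcomes)]

    def missing_files(self):
        """Return the configured paths that do not exist on this machine."""
        return [p for p in (self.file, self.action_service) if not os.path.isfile(p)]


def outcome_from_status(controller: ExternalController, status: int) -> str:
    """Assumed mapping: SUCCEEDED -> first outcome, anything else -> second."""
    outcomes = controller.outcomes()
    return outcomes[0] if status == SUCCEEDED else outcomes[1]


BASE = "/home/user/projects/shadow_robot/base/src/EZGripper/ezgripper_driver"
EZGRIPPER = ExternalController(
    name="EZGripperController",
    file=BASE + "/controllers/joint_state_controller.py",
    action_service=BASE + "/action/JointStateGripper.action",
    server_name="joint_state_ezgripper_controller",
    node_type="joint_state_controller.py",
    number_outcomes=2,
)

if __name__ == "__main__":
    print(EZGRIPPER.outcomes(), "missing:", EZGRIPPER.missing_files())
```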

Define the joint states and/or poses corresponding to where the robot should move during the task. In our case, we use exactly the same ones as in the previous section.

In the Setup configuration tab, add a new sensor in the corresponding editor (see here for more details). In our case, here is the configuration we used.

In the High level component editor, add the grasping algorithm you want to run (we use exactly the same configuration as above).

Launch the robot (you can either click on the Launch robot button or use the shortcut Ctrl+l).

In the Task editor tab, you should see a set of states ready to be used. You can drag and drop them into the editor area.

Make sure all the sockets are properly connected. To connect all the remaining sockets to Task failure, you can right click and select Connect free sockets.

Right click and select Execute. A window will prompt you for the name you want to give to the task. Once the name is provided, you should see your robot autonomously picking up the object.
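The Connect free sockets action can be pictured as follows: every outcome that is not wired yet gets connected to Task failure, while existing connections are left untouched. The sketch below reproduces that idea on a plain dictionary of connections; the data layout, the `connect_free_sockets` helper and the state names are hypothetical, not GRIP's internal representation.

```python
from typing import Dict, List, Optional, Tuple

TASK_FAILURE = "task_failure"


def connect_free_sockets(outcomes: Dict[str, List[str]],
                         connections: Dict[Tuple[str, str], Optional[str]]):
    """Return a copy of `connections` where every unconnected outcome goes to Task failure."""
    wired = dict(connections)  # do not modify the caller's dictionary
    for state, state_outcomes in outcomes.items():
        for outcome in state_outcomes:
            if wired.get((state, outcome)) is None:
                wired[(state, outcome)] = TASK_FAILURE
    return wired


if __name__ == "__main__":
    outcomes = {
        "Kinect2": ["success", "failure"],
        "EZGripperController": ["success", "failure"],
    }
    connections = {("Kinect2", "success"): "EZGripperController",
                   ("EZGripperController", "success"): "task_success"}
    for key, target in sorted(connect_free_sockets(outcomes, connections).items()):
        print(key, "->", target)
```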

Note

You don’t have to use MoveIt! at all if you have your own controller and planner for the robot arm. The steps are mostly the same, except that instead of the ComputePlan and Move states, you will have the generated state that runs your own code!
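If you replace ComputePlan and Move with your own code, the generated state essentially has to run your planner and controller and report an outcome. As a stand-in for a real planner, the hypothetical sketch below generates a straight-line trajectory in joint space (linear interpolation with a bounded per-waypoint change) and returns "success" or "failure". `send_waypoint` is a placeholder for your own controller call, assumed to return a boolean; note that nothing here checks for collisions, which is one of the things MoveIt! would otherwise handle for you.

```python
import math
from typing import Callable, List, Sequence


def interpolate_joints(start: Sequence[float], goal: Sequence[float],
                       max_step: float = 0.05) -> List[List[float]]:
    """Linearly interpolate so no joint moves more than max_step (rad) between waypoints."""
    if len(start) != len(goal):
        raise ValueError("start and goal must have the same number of joints")
    largest = max((abs(g - s) for s, g in zip(start, goal)), default=0.0)
    steps = max(1, math.ceil(largest / max_step))
    return [[s + (g - s) * i / steps for s, g in zip(start, goal)]
            for i in range(steps + 1)]


def my_move_state(current: Sequence[float], goal: Sequence[float],
                  send_waypoint: Callable[[List[float]], bool]) -> str:
    """Hypothetical replacement for ComputePlan + Move: plan, then stream waypoints."""
    try:
        trajectory = interpolate_joints(current, goal)
    except ValueError:
        return "failure"
    for waypoint in trajectory:
        if not send_waypoint(waypoint):  # your own controller call (assumed bool result)
            return "failure"
    return "success"


if __name__ == "__main__":
    sent: List[List[float]] = []
    print(my_move_state([0.0, 0.0], [0.2, -0.1], lambda w: sent.append(w) or True), len(sent))
```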